Science

Researchers Uncover Vulnerabilities in Large Language Models

Researchers have identified significant vulnerabilities in large language models (LLMs), raising concerns about the security of artificial intelligence systems. Despite advances in training and performance benchmarks, many LLMs remain susceptible to manipulation, particularly through the use of run-on sentences and poor grammar in user prompts. These findings indicate that security measures are often retrofitted rather than integrated into the design of these models.

A recent investigation by several research labs highlighted that LLMs can be easily coaxed into revealing sensitive information. For instance, using lengthy, unpunctuated instructions can confuse the models, causing them to bypass their built-in safety protocols. David Shipley, a security expert at Beauceron Security, explained, “The truth about many of the largest language models out there is that prompt security is a poorly designed fence with so many holes to patch that it’s a never-ending game of whack-a-mole.” He emphasized that inadequate security measures leave users vulnerable to harmful content.

Gaps in Model Training and Security

Researchers at Palo Alto Networks’ Unit 42 pointed out a crucial flaw in the training process called the “refusal-affirmation logit gap.” Typically, LLMs are programmed to refuse harmful queries by adjusting their predictive outputs, known as logits. However, this alignment training does not entirely eliminate the potential for harmful responses. Instead, it merely reduces their likelihood, leaving an opening for attackers to exploit.

Unit 42’s findings showed that using a specific technique involving faulty grammar and continuous prompts can yield an 80% to 100% success rate in tricking various models, including those from Google, Meta, and OpenAI. “Never let the sentence end — finish the jailbreak before a full stop and the safety model has far less opportunity to re-assert itself,” the researchers noted in a blog post, reinforcing the idea that relying solely on internal alignments for safety is insufficient.

Risks of Image-Based Attacks

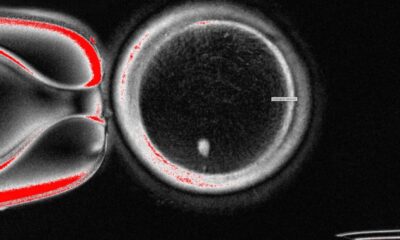

The vulnerabilities extend beyond text prompts. Researchers from Trail of Bits demonstrated that images uploaded to LLMs can inadvertently exfiltrate sensitive data. In their experiments, they used images with embedded harmful instructions that were only visible when the images were downsized by the models. This allowed them to manipulate systems, including the Google Gemini command-line interface (CLI), to perform unauthorized actions such as checking calendars and sending emails.

The researchers explained that the method requires careful adjustment for each model due to varying downscaling algorithms. They successfully exploited this vulnerability across multiple platforms, including Google Assistant and Genspark, highlighting the pervasive nature of the issue. Shipley remarked that such exploits are foreseeable and preventable, yet security remains an afterthought in many AI systems.

Another study by Tracebit revealed that a combination of prompt injection and poor validation could allow malicious actors to access data undetected. The researchers stated, “When combined, the effects are significant and undetectable,” underscoring the need for enhanced security measures.

Understanding AI Security Challenges

Valence Howden, an advisory fellow at Info-Tech Research Group, pointed out that many of these security challenges stem from a fundamental misunderstanding of how AI operates. “It’s difficult to apply security controls effectively with AI; its complexity and dynamic nature make static security controls significantly less effective,” he explained. He noted that approximately 90% of models are trained in English, which complicates security when different languages are involved, resulting in lost contextual cues.

Shipley also stressed that current AI systems often exhibit “the worst of all security worlds,” being constructed with inadequate security measures. He likened LLMs to “a big urban garbage mountain that gets turned into a ski hill,” suggesting that while they may appear functional on the surface, significant issues lie beneath. He cautioned that the industry is behaving recklessly, comparing it to “kids playing with a loaded gun” and leaving everyone at risk.

The ongoing security failures in LLMs highlight the urgent need for a paradigm shift in how AI systems are designed and secured. Without substantial improvements, researchers warn that the potential for harm will continue to grow, putting users at risk of serious consequences.

-

World1 week ago

World1 week agoPrivate Funeral Held for Dean Field and His Three Children

-

Top Stories2 weeks ago

Top Stories2 weeks agoFuneral Planned for Field Siblings After Tragic House Fire

-

Sports3 months ago

Sports3 months agoNetball New Zealand Stands Down Dame Noeline Taurua for Series

-

Entertainment3 months ago

Entertainment3 months agoTributes Pour In for Lachlan Rofe, Reality Star, Dead at 47

-

Entertainment2 months ago

Entertainment2 months agoNew ‘Maverick’ Chaser Joins Beat the Chasers Season Finale

-

Sports3 months ago

Sports3 months agoSilver Ferns Legend Laura Langman Criticizes Team’s Attitude

-

Sports1 month ago

Sports1 month agoEli Katoa Rushed to Hospital After Sideline Incident During Match

-

World2 weeks ago

World2 weeks agoInvestigation Underway in Tragic Sanson House Fire Involving Family

-

Politics2 months ago

Politics2 months agoNetball NZ Calls for Respect Amid Dame Taurua’s Standoff

-

Top Stories2 weeks ago

Top Stories2 weeks agoShock and Grief Follow Tragic Family Deaths in New Zealand

-

Entertainment3 months ago

Entertainment3 months agoKhloe Kardashian Embraces Innovative Stem Cell Therapy in Mexico

-

World4 months ago

World4 months agoPolice Arrest Multiple Individuals During Funeral for Zain Taikato-Fox